Imagine your company just secured $500,000 to build an AI solution that will transform your customer service operations. The data science consultants are pitching “deep learning neural networks with transformer architecture.” It sounds impressive, but you’re wondering: do we actually need that level of complexity, or would a simpler machine learning approach deliver results faster and cheaper?

This scenario plays out daily in boardrooms worldwide, and the stakes are high. More than 80% of AI projects fail, twice the failure rate of non-AI IT projects. The choice between machine learning and deep learning isn’t just technical jargon: it can be the difference between a $50,000 solution deployed in 8 weeks and a $500,000 project that takes 9 months. Choose wrong, and your project risks joining that 80%.

Yet the opportunity is massive. Global enterprises are already committing significant budgets to AI — Gartner projects AI spending to exceed $2 trillion by 2026. At the same time, the deep learning market alone is forecast to expand from about $34 billion in 2025 to $279.6 billion by 2032, driven by demand for vision, speech, and language models.

This guide cuts through the buzzwords and shows you what machine learning and deep learning actually do, why they matter for business outcomes, and how to choose and implement the right approach.

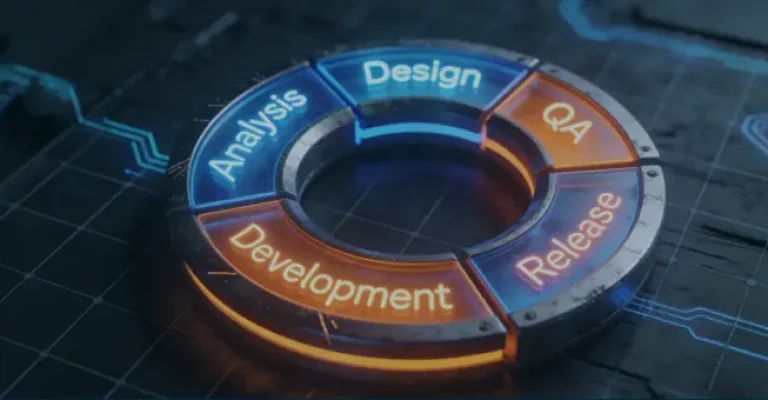

The AI family tree: from artificial intelligence to deep learning

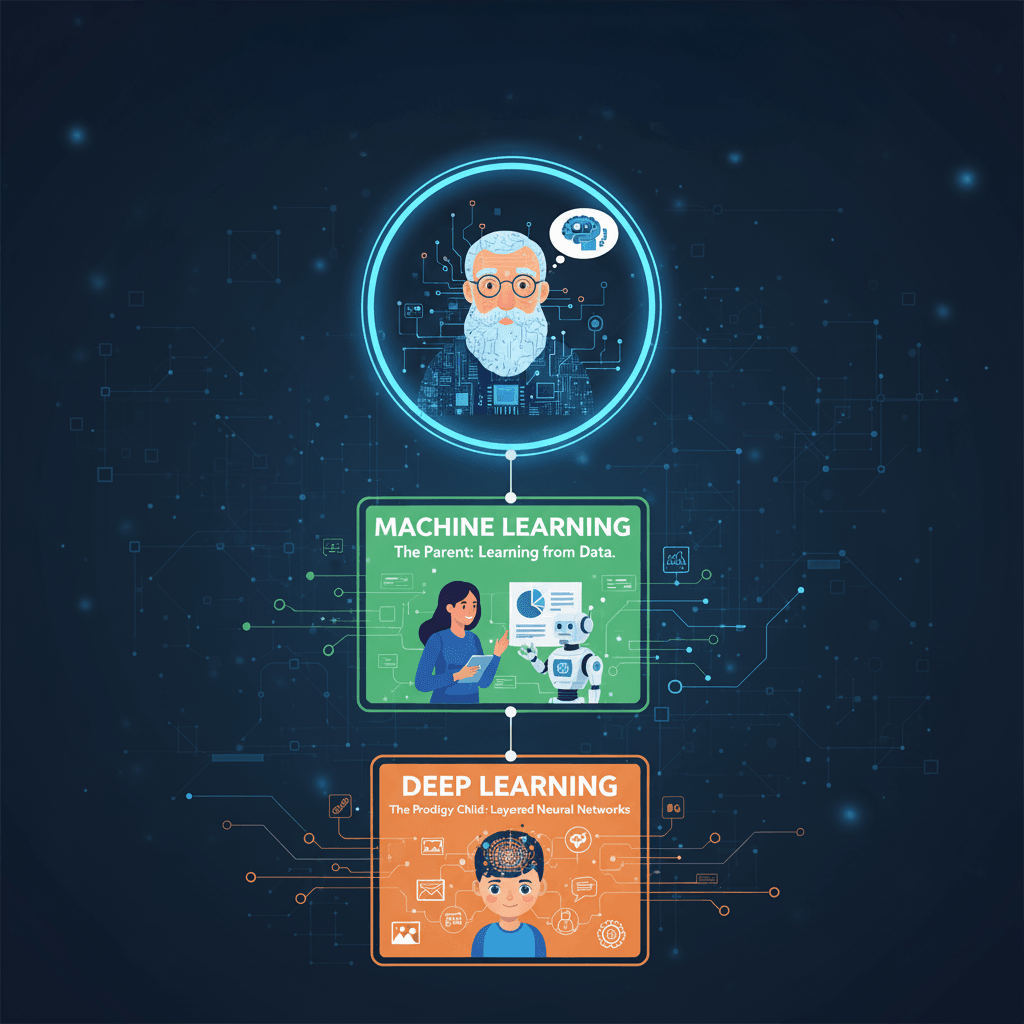

When a vendor tells you they’re using “AI,” what does that actually mean? The terms artificial intelligence, machine learning, and deep learning are often used interchangeably; however, they represent fundamentally different technology categories, each with distinct costs, capabilities, and business applications. Think of it like a family tree where each generation gets more specialized.

Think of AI as the grandparent of the family — the broad, overarching discipline focused on making machines think and act like humans. Within AI lives ML — the parent, responsible for teaching computers to learn from data rather than rigid programming. And then there’s DL — the prodigy child, a specialized branch of ML that mimics how the human brain processes complex information through layered neural networks.

Each step down this chain represents a jump in sophistication, data dependency, and autonomy.

| Level | Description | Example capabilities |
| --- | --- | --- |
| Artificial intelligence (AI) | A broad field focused on simulating human intelligence | Rule-based systems, expert systems, robotics |
| Machine learning (ML) | A subset of AI where systems learn patterns from structured data | Forecasting, classification, anomaly detection |
| Deep learning (DL) | A subset of ML that uses neural networks to process unstructured data (images, speech, text) | Image recognition, voice assistants, and large language models (LLMs) |

All three are parts of one continuum, not competitors. As you move from AI to ML to DL, systems require:

- Less human intervention (they learn features automatically)

- More data and computing power

- Greater ability to handle complexity and unstructured information

Understanding this hierarchy helps business leaders align their goals with the right level of intelligence — instead of over-engineering when a simpler model could do the job.

ML vs DL comparison: how they work and differ

At their core, both machine learning (ML) and deep learning (DL) teach computers to recognize patterns and make predictions. The difference lies in how they learn, what they need to learn effectively, and how much human guidance they require along the way.

Machine learning models are like talented apprentices — they learn from examples, but need you to define what matters. Deep learning models, in contrast, are more like independent researchers — they discover meaningful patterns on their own, but demand vast amounts of data and computational power to get there.

| Aspect | Machine learning (ML) | Deep learning (DL) |
| --- | --- | --- |
| Data type | Structured (tables, spreadsheets, sensor logs) | Unstructured (images, video, speech, text) |
| Learning approach | Requires manual feature engineering: humans decide which data points are important | Learns automatically through neural networks with minimal human input |
| Data requirement | Performs well with moderate datasets | Requires massive labeled datasets for effective training |
| Interpretability | High: models can be explained and audited easily | Low: often a “black box” with limited transparency |
| Hardware needs | Works efficiently on CPUs | Needs GPUs/TPUs for high-speed parallel computation |
| Development speed | Typically faster to prototype (weeks) | Longer training and tuning cycles (months) |
| Typical tools | scikit-learn, XGBoost, LightGBM | TensorFlow, PyTorch, Keras |
| Cost | Lower: fewer compute and data needs | Higher: intensive compute and storage demands |

When deciding between ML and DL, think of it as a trade-off between control and capability.

- If you need speed, interpretability, and structured insights, machine learning offers clarity and agility.

- If your challenge involves complex, unstructured data (like customer conversations, images, or video streams), deep learning’s autonomy and power pay off — despite higher resource costs.

The smartest organizations don’t view ML and DL as rivals but as complementary tools in the AI toolkit — each chosen based on problem type, data availability, and business urgency.

Business applications and impact

Machine learning

Machine learning delivers measurable business value across industries by automating decision-making, improving prediction accuracy, and optimizing operations. Understanding specific ML applications and their quantified outcomes helps justify investments and set realistic expectations.

1. Fraud detection and risk management

Financial institutions deploy machine learning to identify fraudulent transactions in real-time, analyzing patterns across millions of data points to flag suspicious activity with minimal false positives.

The U.S. Treasury Department announced that machine learning-based tools helped prevent and recover over $4 billion in fraud and improper payments in fiscal year 2024, up from $652.7 million in FY23. That is roughly a sixfold jump (an increase of about 513%), which shows what ML-based fraud detection can deliver at scale.
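The percentage follows directly from the two reported figures. Here is a quick check, treating “over $4 billion” as exactly $4,000 million, which is an assumption made only for the arithmetic:

```python
# Figures from the paragraph above, in millions of USD.
# "Over $4 billion" is treated as exactly 4,000 for this check (assumption).
fy23_millions = 652.7
fy24_millions = 4000.0

# Percentage increase = (new - old) / old * 100
increase_pct = (fy24_millions - fy23_millions) / fy23_millions * 100
# Simple multiple = new / old
multiple = fy24_millions / fy23_millions

print(f"Increase: {increase_pct:.0f}%")
print(f"FY24 is {multiple:.1f}x FY23")
```

Note that a 513% increase and a roughly 6x multiple describe the same change: the increase is measured on top of the original 100%.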

Potential business impact:

- Real-time transaction analysis for identification of suspicious patterns

- Reduction in false positives, decreasing customer friction

- Decrease in investigation time through automated flagging

- Continuous learning for adaptation to new fraud patterns

2. Demand forecasting and inventory optimization

Machine learning algorithms analyze historical sales data, seasonal patterns, market trends, and external factors to predict future demand with significantly greater accuracy than traditional statistical methods.

Potential business impact:

- Reduction in inventory carrying costs through optimized stock levels

- Decrease in stockout situations, improving customer satisfaction

- Lower working capital requirements through precision forecasting

- Greater forecast accuracy, enabling more confident business planning

Retailers implementing ML-based demand forecasting across multiple locations reduce excess inventory while improving product availability. Solutions process point-of-sale data, weather patterns, local events, and competitive pricing to generate location-level predictions updated daily.

3. Customer churn prediction and retention

Machine learning models identify customers likely to cancel services or switch to competitors by analyzing usage patterns, support interactions, payment history, and engagement metrics. This enables proactive retention strategies targeting high-risk customers.

Potential business impact:

- Churn rate reduction through early intervention

- Increase in customer lifetime value through improved retention

- Greater retention program efficiency through targeted outreach

- Revenue protection through the prevention of customer cancellations

Telecommunications and subscription service companies implement gradient boosting models analyzing customer attributes to predict churn probability. Solutions identify at-risk customers 60-90 days before cancellation, triggering personalized retention offers and proactive engagement.
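To make the idea of gradient boosting less abstract, here is a deliberately tiny sketch in plain Python. It assumes two hypothetical features and eight made-up customers, and it uses one-split “stumps” with a simplified leaf update. Production projects would normally rely on a library such as XGBoost or LightGBM rather than code like this.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(X, residuals):
    """Find the single feature/threshold split that best fits the residuals."""
    best = None
    for f in range(len(X[0])):
        for threshold in sorted({row[f] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[f] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[f] > threshold]
            if not left or not right:
                continue  # a split must put customers on both sides
            lmean, rmean = sum(left) / len(left), sum(right) / len(right)
            error = (sum((r - lmean) ** 2 for r in left)
                     + sum((r - rmean) ** 2 for r in right))
            if best is None or error < best[0]:
                best = (error, f, threshold, lmean, rmean)
    return best[1:]

def fit_boosted_stumps(X, y, rounds=50, learning_rate=0.3):
    base = math.log(sum(y) / (len(y) - sum(y)))  # initial log-odds of churn
    scores = [base] * len(y)
    stumps = []
    for _ in range(rounds):
        # Negative gradient of log-loss: actual label minus predicted probability.
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        f, threshold, lmean, rmean = fit_stump(X, residuals)
        stumps.append((f, threshold, lmean, rmean))
        # Each new stump nudges every customer's score toward the right answer.
        scores = [s + learning_rate * (lmean if row[f] <= threshold else rmean)
                  for row, s in zip(X, scores)]
    return base, stumps, learning_rate

def churn_probability(model, row):
    base, stumps, lr = model
    score = base + sum(lr * (lv if row[f] <= t else rv) for f, t, lv, rv in stumps)
    return sigmoid(score)

# Hypothetical customers: (days since last login, support tickets in last 90 days)
X = [(2, 0), (5, 1), (3, 0), (40, 4), (55, 3), (35, 5), (4, 2), (60, 1)]
y = [0, 0, 0, 1, 1, 1, 0, 1]  # 1 = churned
model = fit_boosted_stumps(X, y)

at_risk = churn_probability(model, (45, 2))  # inactive for 45 days
loyal = churn_probability(model, (3, 1))     # logged in 3 days ago
print(f"at-risk customer: {at_risk:.2f}, loyal customer: {loyal:.2f}")
```

The point for a business reader is the shape of the output: a probability per customer that can be ranked and used to decide who receives a retention offer.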

4. Predictive maintenance in manufacturing

Machine learning algorithms analyze sensor data from industrial equipment to predict failures before they occur, enabling scheduled maintenance that prevents costly downtime.

Potential business impact:

- Reduction in unplanned downtime through failure prediction

- Decrease in maintenance costs by targeting actual needs

- Extension of equipment lifespan through optimal care

- Improvement in production efficiency through the prevention of interruptions

Manufacturing facilities deploy ML models analyzing vibration, temperature, pressure, and operational data from machinery. Systems predict equipment failures days in advance, enabling scheduled maintenance during planned downtime rather than emergency repairs during production runs.
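Before investing in a trained model, many teams start with a statistical baseline. The sketch below is such a baseline, not a machine learning model: it flags sensor readings that sit far from a “healthy” reference window using a z-score. The readings, the window size, and the threshold are all hypothetical.

```python
from statistics import mean, stdev

def flag_anomalies(readings, baseline_size=5, z_threshold=3.0):
    """Flag readings that deviate strongly from the initial healthy baseline."""
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    return [
        index
        for index, value in enumerate(readings)
        if index >= baseline_size and abs(value - mu) / sigma > z_threshold
    ]

# Hypothetical hourly vibration readings (mm/s); the last two drift upward.
vibration = [2.0, 2.1, 1.9, 2.0, 2.0, 2.1, 1.9, 2.6, 3.1]
alerts = flag_anomalies(vibration)
print("Readings to inspect:", alerts)
```

An ML model earns its place when it beats a baseline like this one, for example by combining vibration with temperature and pressure to give earlier warnings.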

5. Customer segmentation for marketing

Unsupervised machine learning algorithms identify natural customer groupings based on behavior, preferences, demographics, and purchase patterns, enabling targeted marketing strategies.

Potential business impact:

- Increase in marketing campaign effectiveness through personalization

- Reduction in customer acquisition cost through improved targeting

- Improvement in email engagement through relevant messaging

- Increase in conversion rates through segment-specific approaches

E-commerce platforms use K-means clustering to segment customers into distinct groups based on browsing behavior, purchase frequency, average order value, and product preferences. Personalized campaigns for each segment achieve higher conversion rates compared to generic messaging.
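For readers curious about what K-means actually does, here is a minimal sketch in plain Python. The customer data is made up, the starting centroids are simply the first two customers (a simplification for reproducibility), and the features are left unscaled. A real project would more likely use scikit-learn’s `KMeans` with standardized features.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then re-average."""
    # Deterministic start for this sketch: the first k points act as centroids.
    centroids = [points[i] for i in range(k)]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each customer joins the closest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids

# Hypothetical customers: (orders per year, average order value in USD)
customers = [(2, 30), (40, 25), (3, 35), (38, 20), (1, 28), (42, 22)]
segments, centers = kmeans(customers, k=2)
print(segments)  # segment index per customer
print(centers)   # the "typical" customer of each segment
```

Here the algorithm separates occasional buyers from frequent buyers without ever being told those labels exist, which is what “unsupervised” means in practice.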

Deep learning

Deep learning transforms business capabilities in domains involving unstructured data, complex pattern recognition, and scenarios where traditional approaches prove inadequate. Understanding these advanced applications clarifies when deep learning’s higher costs and complexity deliver justified returns.

1. Computer vision and image recognition

Deep learning revolutionizes visual data processing, enabling systems to understand, classify, and extract information from images and videos with human-level or superior accuracy.

When used for manufacturing quality inspection, convolutional neural networks (CNNs) can analyze product images to detect defects with exceptional accuracy, surpassing human inspector performance. Semiconductor manufacturers implement CNN-based visual inspection across production lines, identifying microscopic defects invisible to human inspectors.

Potential business impact:

- Improvement in the defect detection rate compared to manual inspection

- Reduction in quality control costs through automation

- Increase in inspection speed through automated processing

- Decrease in product returns through enhanced quality assurance

2. Natural language processing and language models

Natural language processing (NLP) enables machines to understand, process, and generate human language. Large language models (LLMs), built on transformer architectures, are a cutting-edge NLP technology that significantly improves accuracy, context awareness, and scalability. Together, NLP and LLMs are transforming customer service, content creation, and business intelligence.

Intelligent chatbots and virtual assistants rely on NLP for dialogue understanding, intent recognition, and context tracking. LLMs play a key role by generating coherent, context-aware responses and handling complex conversations automatically.

Potential business impact:

- Reduction in customer support costs through automation

- Decrease in query resolution time from minutes to seconds for automated cases

- Increase in agent productivity by focusing on complex customer issues

- Improvement in customer satisfaction scores

- 24/7 service availability without proportional staffing costs

Call centers deploy speech recognition to analyze thousands of calls each month, identifying compliance risks, agent performance patterns, and customer pain points that inform training programs and process improvements.

Voice-enabled interfaces use deep learning-based speech recognition to power virtual assistants, enabling hands-free operation, improving accessibility for users with disabilities, and supporting natural interaction paradigms.

Potential business impact:

- Reduction in documentation time through voice dictation

- Automation of meeting transcription

- Improvement in accessibility compliance

3. Recommendation systems and personalization

Deep learning analyzes user behavior patterns, preferences, and contextual signals to deliver highly personalized recommendations that drive engagement and revenue.

E-commerce product recommendations leverage neural collaborative filtering and deep learning models to analyze purchase history, browsing behavior, product attributes, and contextual signals, enabling the delivery of relevant and personalized product suggestions.

Potential business impact:

- Increase in conversion rates

- Improvement in average order value

- Enhancement of customer lifetime value

- Improvement in cross-selling effectiveness

4. Generative AI and creative applications

Generative models, including generative adversarial networks (GANs), diffusion models, and transformer-based language models, create original content such as images, text, code, and designs, enabling creative automation and synthetic data generation.

Marketing content creation leverages generative models to produce marketing copy, social media content, and design variations, accelerating creative workflows while ensuring brand consistency.

Potential business impact:

- Increase in content production speed

- Reduction in creative iteration cycle time from weeks to hours

- Increase in A/B testing volume

- Reduction in content creation costs

Implementation phases

Understanding the key implementation steps, along with the resources required for development, infrastructure, and maintenance, allows for more realistic budget planning.

Machine learning projects

Main cost drivers:

- Initial development: typically involves data preparation, model training, and integration efforts. Costs vary based on project complexity and team expertise.

- First-year infrastructure: includes expenses for computing resources, cloud services, and storage solutions.

- Ongoing maintenance: encompasses model monitoring, retraining, and infrastructure scaling to maintain performance over time.

ROI timeline (typical):

| Timeframe | Milestone | Notes |
| --- | --- | --- |
| Month 1-3 | Setup & data preparation | Initial data collection and preprocessing; early model prototyping |
| Month 3-6 | Early value realization | Deployment of MVP models; initial improvements in efficiency |
| Month 6-9 | Expanded deployment | Broader model deployment; noticeable impact on decision-making |
| Month 9-12 | Break-even point | Operational savings begin to offset initial investments |
| Year 2 | Significant gains | Optimizations and retraining lead to measurable performance boosts |
| Year 3+ | Mature ROI | Full adoption; sustained improvements in efficiency and effectiveness |

ML projects are typically faster to deploy and start delivering value within months, especially when the datasets are small to medium in size and the models are not too complex.

Deep learning projects

Main cost drivers:

- Initial development: involves extensive data collection, model design, and training phases. High computational resources are often required.

- Infrastructure: significant investment in specialized hardware (e.g., GPUs) or cloud-based solutions to handle intensive processing needs.

- Specialized tooling: adoption of advanced frameworks and tools tailored for deep learning tasks.

ROI timeline (typical):

| Timeframe | Milestone | Notes |
| --- | --- | --- |
| Month 1-3 | Data collection & infrastructure setup | DL requires larger labeled datasets, more preprocessing, and a GPU setup |
| Month 3-6 | Model prototyping | Initial DL models trained; may still require tuning to reach usable accuracy |
| Month 6-9 | Validation & pilot deployment | Testing models in controlled environments; assessing feasibility |
| Month 9-12 | Early production use | Limited deployment; early operational insights |
| Year 2 | Full deployment | Scaling models to full production; stabilization of infrastructure |
| Year 3+ | Mature ROI | Continuous improvements and refinements lead to substantial gains |

Deep learning projects take longer to realize ROI because they need larger datasets, more compute, and longer training/optimization cycles. But they can deliver higher long-term gains, especially for image, video, NLP (LLM), or complex prediction tasks.

Responsible and ethical AI adoption

As machine learning and deep learning systems become embedded in business processes, the potential risks grow in significance. Today, AI is increasingly impacting regulatory compliance, brand trust, ROI, and more. As a result, responsible AI adoption is no longer optional for companies embedding the technology into strategic product and business initiatives.

Understanding AI bias and its business impact

AI bias occurs when machine learning or deep learning models produce systematically inaccurate outcomes for specific groups. This happens not because algorithms are inherently discriminatory, but because they learn patterns from biased historical data or through flawed training processes.

Common sources of bias:

- Historical data bias: training models on past decisions that reflected human prejudices. For instance, a hiring AI trained on 10 years of resume data will learn to replicate historical biases if the company previously favored certain demographics.

- Sampling bias: training data that doesn’t represent the full population that the model is expected to serve. For example, a facial recognition system trained primarily on one ethnicity will perform poorly on others.

- Label bias: human annotators introducing their own biases when labeling training data. What one person considers “professional attire” in images may reflect cultural assumptions.

- Amplification bias: a UCL study found that AI not only learns human biases but also exacerbates them. Models can amplify subtle patterns in training data, making discrimination more extreme than in the original data.

Real-world business consequences:

- Legal exposure: AI bias lawsuits are on the rise. Companies face discrimination claims when automated systems disadvantage protected groups in hiring, lending, housing, or other decisions.

- Regulatory penalties: under the GDPR, companies must be able to provide meaningful information about automated decisions that significantly affect individuals. The EU AI Act entered into force in August 2024; its bans on prohibited practices have applied since February 2025, while most requirements for high-risk systems, including bias testing and transparency, phase in from August 2026. Fines for the most serious violations can reach €35 million or 7% of the company’s global annual turnover.

- Reputational damage: biased AI systems exposed in the media can devastate brand trust. A major tech company’s biased resume screening tool or a bank’s discriminatory loan algorithm becomes a PR crisis within hours.

- Lost revenue opportunities: biased models that perform poorly for segments of your customer base directly impact revenue. According to DataRobot’s research, of organizations experiencing negative impacts from AI bias, 62% reported lost revenue and 61% reported lost customers.

Explainability: making AI decisions transparent

When your ML or DL model denies a loan application or flags a transaction as fraudulent, can you explain why? Increasingly, you must — both legally and practically.

The ML vs DL explainability challenge

Machine learning models such as linear regression and decision trees offer inherent interpretability. You can trace exactly why a prediction was made, which features mattered most, and how changing inputs would affect outputs. Ensembles such as random forests are harder to trace tree by tree, but they still expose feature importances.
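To see what “inherent interpretability” looks like, consider a linear scoring model. The sketch below uses invented weights and an invented applicant, so the numbers mean nothing in themselves. What matters is that every feature’s contribution to the final score can be read off directly.

```python
# Hypothetical credit-scoring weights; not taken from any real model.
weights = {"income_k": 0.5, "missed_payments": -8.0, "years_as_customer": 1.5}
intercept = 20.0

def explain(applicant):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = intercept + sum(contributions.values())
    return score, contributions

applicant = {"income_k": 60, "missed_payments": 3, "years_as_customer": 4}
score, contributions = explain(applicant)

# List the drivers of the decision, largest effect first.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>18}: {value:+.1f}")
print(f"{'score':>18}: {score:.1f}")
```

An auditor can see at a glance that missed payments pulled this score down by 24 points, which is the kind of answer regulators and customers tend to ask for.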

Deep learning models with millions of parameters function as “black boxes.” Even their creators often can’t explain why a specific prediction was made. This creates serious challenges in regulated industries.

Explainable AI (XAI) techniques for deep learning:

- SHAP (Shapley Additive Explanations) calculates the contribution of each feature to a prediction using game theory concepts, showing which inputs most influenced the model’s decision. This method works with any model type but is computationally intensive.

- LIME (Local Interpretable Model-agnostic Explanations) approximates complex models locally with simpler, interpretable ones. It explains individual predictions by highlighting the features that mattered for that specific case.

- Attention visualization applies to transformer models: attention weights indicate which parts of the input text or image the model focused on. This method is especially useful for NLP and computer vision applications.

- Feature visualization is used in convolutional neural networks to illustrate the patterns each layer learned to detect, aiding in understanding the model’s internal representations.
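The game-theory idea behind SHAP can be shown on a toy example. The sketch below computes exact Shapley values for a hypothetical three-input model by trying every order in which features can be “switched on.” The model, the inputs, and the all-zero baseline are assumptions for illustration. Exact enumeration like this only works for a handful of features, which is why libraries such as `shap` rely on approximations.

```python
from itertools import permutations

def shapley_values(model, instance, baseline):
    """Exact Shapley values: average each feature's marginal contribution over
    every order in which features can be switched from baseline to actual."""
    n = len(instance)
    totals = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = instance[i]        # reveal feature i
            value = model(current)
            totals[i] += value - previous   # credit the change to feature i
            previous = value
    return [t / len(orders) for t in totals]

# Hypothetical risk model with an interaction between the last two inputs.
def toy_model(x):
    return 2.0 * x[0] + x[1] * x[2]

instance = [3.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

Two properties are worth noticing: the contributions add up to the gap between this prediction and the baseline prediction, and the two interacting inputs share the credit for their joint effect equally.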

Business implementation approach

For machine learning projects in regulated industries: choose inherently interpretable models (such as linear regression, decision trees, or rule-based systems) even if they sacrifice 2-3% accuracy compared to more complex alternatives.

For deep learning, where high accuracy justifies complexity: implement XAI tools (SHAP/LIME) from project start, not as an afterthought. Budget for explainability infrastructure — expect 15–25% additional development time and costs.

Document model decisions: maintain audit trails showing model version, training data, and decision rationale for high-stakes predictions. This protects against regulatory scrutiny and lawsuits.

Human oversight for critical decisions: keep humans in the loop for decisions that have a significant individual impact. AI recommends, humans decide—especially for lending, hiring, and medical applications.

Compliance: navigating AI regulations

The regulatory landscape for AI has transformed dramatically. What was largely unregulated in 2022 now faces comprehensive frameworks that impose significant compliance burdens.

Key regulatory frameworks:

EU AI Act (in force since August 2024, with obligations phasing in from 2025): The world’s first comprehensive AI regulation categorizes AI systems by risk level:

- Unacceptable risk (banned): social scoring, real-time biometric surveillance in public spaces, exploitative AI

- High risk (strict requirements): AI in hiring, credit decisions, law enforcement, critical infrastructure, medical devices

- Limited risk (transparency obligations): chatbots must disclose they’re AI, and deepfakes must be labeled

- Minimal risk (no restrictions): AI-powered spam filters, inventory management, recommendation engines for entertainment

The GDPR key principles include:

- Right to explanation for automated decisions

- Data minimization — collect only necessary data

- Purpose limitation — use data only for stated purposes

- Accuracy and storage limitation requirements

- Security and confidentiality obligations

Sector-specific regulations:

- Healthcare (HIPAA, FDA): medical AI must meet device approval standards and protect patient data

- Financial services: fair lending laws, credit reporting accuracy, anti-discrimination requirements

- Employment: EEOC guidelines prohibit discriminatory AI in hiring, even if unintentional

Practical compliance steps:

1. Conduct AI inventory: document all ML and DL systems, their purposes, data sources, and risk levels. Many companies lack comprehensive documentation regarding where AI is deployed across their organization.

2. Risk assessment: classify systems under applicable frameworks (EU AI Act risk categories, FDA device classifications, etc.). High-risk systems require extensive compliance measures.

3. Data governance: implement processes ensuring training data quality, documentation, and bias testing. Maintain data lineage showing where training data originated and how it was processed.

4. Bias testing: regularly evaluate models for discriminatory outcomes across demographic groups. Test before deployment and continuously monitor in production. Document testing methodologies and results.

5. Documentation requirements: maintain technical documentation, including model architecture, training data characteristics, performance metrics, intended use, and limitations.

6. Vendor due diligence: if using third-party AI systems or cloud APIs, ensure vendors meet compliance requirements. You remain liable even when using external providers.

7. Budget reality: expect to add 20–35% to project costs for high-risk AI systems to cover bias testing, documentation, audit trails, and legal review.
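The bias testing step in the list above can start with a very simple measurement. The sketch below compares approval rates across two groups and computes their ratio. The 0.8 cut-off reflects the “four-fifths rule,” a screening heuristic from US employment guidance. It is a starting signal, not a legal test, and the decision data here is invented.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest group rate."""
    return min(rates.values()) / max(rates.values())

# Hypothetical model decisions for two applicant groups of 100 people each.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 42 + [("B", False)] * 58
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths heuristic (assumed threshold)

print(rates, f"ratio={ratio:.2f}", "REVIEW" if needs_review else "OK")
```

Running a check like this before deployment and on a schedule afterward, and keeping the results, also produces the documentation that the steps above call for.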

Building human oversight into AI systems

Fully automated AI decisions create maximum legal and reputational risk. Smart organizations design human oversight into their AI systems from the start.

Oversight models:

- Human-in-the-loop: AI recommends, human intervenes at every decision cycle to refine and correct AI output. In high-stakes decisions like loan approvals, medical diagnoses, or hiring, this model enables companies to enforce accountability while benefiting from AI insights. While reducing throughput, it ensures high quality control standards.

- Human-on-the-loop: AI decides autonomously, human monitors and can intervene if necessary or when prompted by AI. Used for moderate-risk decisions like fraud flagging or content moderation, this approach involves humans in the review of samples and unusual cases, balancing efficiency with oversight.

- Human-in-command: AI provides analysis, human makes all decisions, overseeing the AI’s broader impact and deciding when and how to use it. Used when AI augments human expertise rather than replacing it, this model is common in strategic business decisions where AI provides data insights.

Monitoring and intervention:

- Anomaly detection: flag predictions with low confidence scores or unusual feature patterns for human review. If the model is normally 95% confident, but only 60% confident in a particular case, escalate to humans.

- Regular auditing: review sample predictions monthly or quarterly. Check if model decisions align with business values and ethical principles. Look for model drift when monitoring AI performance and output over time.

- User feedback loops: enable customers or employees to challenge AI decisions. Implement appeals processes. Track patterns in overturned decisions to identify systematic issues.
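The confidence-based escalation described in the list above is straightforward to express in code. This sketch assumes a hypothetical 0.80 threshold and invented transaction IDs. The right cut-off depends on the use case and on how costly a wrong automated decision would be.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.80  # hypothetical cut-off; tune per use case and risk level

@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's probability for the predicted label

def route(prediction, threshold=REVIEW_THRESHOLD):
    """Send low-confidence predictions to a human queue, automate the rest."""
    return "human_review" if prediction.confidence < threshold else "auto"

batch = [
    Prediction("txn-001", "legitimate", 0.97),
    Prediction("txn-002", "fraud", 0.60),
    Prediction("txn-003", "legitimate", 0.81),
]
queues = {p.case_id: route(p) for p in batch}
print(queues)
```

Logging which escalated cases humans overturn gives you the feedback data needed to spot systematic issues and to adjust the threshold over time.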

Future outlook: 2025–2030

The machine learning vs deep learning distinction won’t disappear by 2030, but the line between their practical applications will blur as hybrid architectures, automated development tools, and edge computing transform how organizations deploy AI. Understanding these trends will help you make technology investments that remain relevant as the landscape evolves.

1. Hybrid AI architectures

Until recently, companies had to choose between traditional machine learning for interpretability and deep learning for accuracy and adaptability. The emerging trend is to combine them — integrating decision trees or regression models (for structured data and explainability) with neural networks and generative AI (for creative or unstructured data tasks).

Examples of hybrid innovation:

- Retail: ML models forecast demand; DL-based vision systems track shelf stock; generative AI predicts new product combinations.

- Healthcare: DL reads diagnostic images, while rule-based ML ensures regulatory traceability.

- Manufacturing: predictive maintenance systems mix sensor-based ML with generative anomaly detection for smarter automation.

These hybrid stacks bring the best of both worlds: the interpretability of ML and the sophistication of DL.

2. AutoML and democratization of AI tools

As talent shortages persist, AutoML platforms (like Google Vertex AI or Amazon SageMaker Autopilot) are lowering barriers to entry. Non-specialists can now design, train, and deploy ML/DL models without extensive coding knowledge.

What this means for business:

- Faster prototyping cycles

- Smaller teams can manage larger AI pipelines

- Increased AI literacy across departments, from marketing to operations

Some projections suggest that by 2027, low-code and no-code AutoML tools could power the majority of new AI model development, reflecting a shift toward democratized AI.

3. Edge AI and real-time learning

The next frontier of efficiency lies at the edge — where AI systems process data directly on local devices or sensors instead of centralized cloud servers.

Benefits:

- Real-time responsiveness for critical systems (e.g., autonomous vehicles, industrial robotics, IoT sensors)

- Enhanced data privacy, as sensitive data never leaves the device

- Lower latency and bandwidth costs

According to Fortune Business Insights, the global Edge AI market is projected to grow from $27.01 billion in 2024 to $269.82 billion by 2032. This growth is driven by demand for low-latency processing, improved privacy, and lower bandwidth costs for IoT, robotics, and autonomous systems.

4. Market projections and technology convergence

As boundaries between AI, cloud computing, and data analytics blur, expect the next generation of enterprise systems to be AI-native by design.

Key projections:

- Global AI spending will exceed $4 trillion by 2030.

- Generative AI will contribute up to $4.4 trillion annually to the global economy.

- AI infrastructure will shift toward unified platforms combining MLOps, DLOps, and GenAIOps for end-to-end lifecycle management.

In short, the next era of AI won’t be about choosing one technology over another — but about orchestrating them all into a cohesive, adaptive intelligence fabric.

Executive summary: choosing smartly

If you remember nothing else, remember this: the right AI approach isn’t about complexity — it’s about alignment.

- Start simple: ML delivers strong ROI for most structured business data problems.

- Go deep when necessary: DL shines in tasks involving perception, language, or complex unstructured data.

- Hybrid is the future: many enterprise systems now blend ML’s transparency with DL’s adaptability and generative power.

- Invest in governance: responsible AI design, clear model explainability, and ethical oversight are non-negotiable.

- Think ROI, not hype: the best AI solution is the one that fits your business maturity, data readiness, and strategic priorities — not necessarily the most advanced one.

If you need expert guidance on how to leverage AI to its fullest potential, you can always book a free consultation call with our team.

FAQ

1. What is the difference between LLM, LMM, and RAG?

- LLM (large language model): a deep learning model trained on massive text datasets to understand and generate human-like language (e.g., GPT, Claude). Ideal for summarization, drafting, and conversational AI.

- LMM (large multimodal model): an evolution of LLMs that can process multiple data types — text, images, video, and audio — for use cases like visual search, content moderation, and report generation.

- RAG (retrieval-augmented generation): enhances LLMs by connecting them to external knowledge bases or company data, ensuring more accurate, context-specific answers.

Business takeaway:

Use LLMs for general-purpose language tasks, LMMs for cross-format data intelligence, and RAG for enterprise-grade AI assistants connected to your own data.
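The retrieval half of RAG can be illustrated without any AI service at all. The sketch below ranks a made-up knowledge base by word overlap (a simple stand-in for the learned embeddings and vector database a real system would use) and builds the prompt that would then be sent to an LLM. The documents, the question, and the prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words term counts; a stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = vectorize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents):
    """Combine retrieved context with the question; an LLM call would follow."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical internal knowledge base.
knowledge_base = [
    "Refunds are issued within 14 days of a return request.",
    "Premium support is available on weekdays from 9am to 6pm.",
    "Orders over 50 USD ship free within the EU.",
]
prompt = build_prompt("How long do refunds take?", knowledge_base)
print(prompt)
```

Because the model is told to answer from retrieved company text rather than from memory, its answers can be traced back to a source document, which is the main reason RAG suits enterprise assistants.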

2. What are the key ML and DL technologies used in 2025?

Key tools and frameworks include:

- ML frameworks: scikit-learn, XGBoost, LightGBM, and AutoML platforms like Google Vertex AI and DataRobot.

- DL frameworks: TensorFlow, PyTorch, and Keras for neural networks and transformer-based architectures.

- LLMs: GPT-5, Claude, Gemini, and domain-specific LLMs for healthcare, finance, and legal tasks.

- LMMs (systems that combine text, image, and audio understanding): GPT-4V, Gemini 1.5 Pro, and Meta’s Llama 3.2 Vision.

- RAG: combines generative AI with enterprise data search, enabling context-aware chatbots and intelligent assistants.

- Specialized AI tools: MLOps platforms (Weights & Biases, MLflow) and vector databases (Pinecone, Milvus) that enable scalable deployment.

3. Can ML and DL solutions be developed remotely? Does it make business sense?

Yes — remote AI development is now standard practice. With cloud-based tools, distributed teams can train, test, and deploy ML/DL models efficiently.

Benefits include:

- Access to global talent: hire the best AI engineers and data scientists, regardless of geography.

- Cloud scalability: platforms like Amazon SageMaker, Azure ML, and Google Vertex AI support distributed training and real-time monitoring.

- Lower costs: reduce infrastructure and staffing overhead by leveraging virtualized environments and managed services.

- Faster delivery: follow-the-sun collaboration shortens project cycles and increases iteration speed.

When it makes sense:

Remote ML/DL development is particularly valuable for startups, mid-size tech companies, and enterprises experimenting with new AI products — as long as you ensure data security, version control, and IP protection.

4. How to choose the right ML/DL development vendor?

Selecting a capable AI partner can significantly impact the success of your project. Look for vendors with:

- Proven expertise — a portfolio of production-grade ML/DL projects and case studies.

- Data security — compliance with GDPR, ISO 27001, and enterprise-grade security practices.

- Domain experience — familiarity with your industry’s data types and challenges.

- Scalability — ability to handle growing data pipelines and integrate with your tech stack.

- Post-deployment support — continuous model retraining, monitoring, and performance tuning.

- Transparent pricing — clear estimates for development, compute, and maintenance.

5. What are the current challenges in ML/DL adoption?

Despite massive progress, businesses still face key barriers in AI implementation:

- Data governance: ensuring clean, unbiased, and privacy-compliant datasets.

- Explainability: interpreting model decisions — especially in regulated industries.

- Compute costs: training deep learning models can require expensive GPU clusters.

- Talent shortages: skilled AI professionals remain in high demand and short supply.

- Integration complexity: embedding AI models into legacy systems or real-time operations.